Trustworthy Autonomous Vehicles Architecture

Savvy: Trustworthy Autonomous Vehicle Architecture Through Time-aware Adaptive AI Degradation

Overview

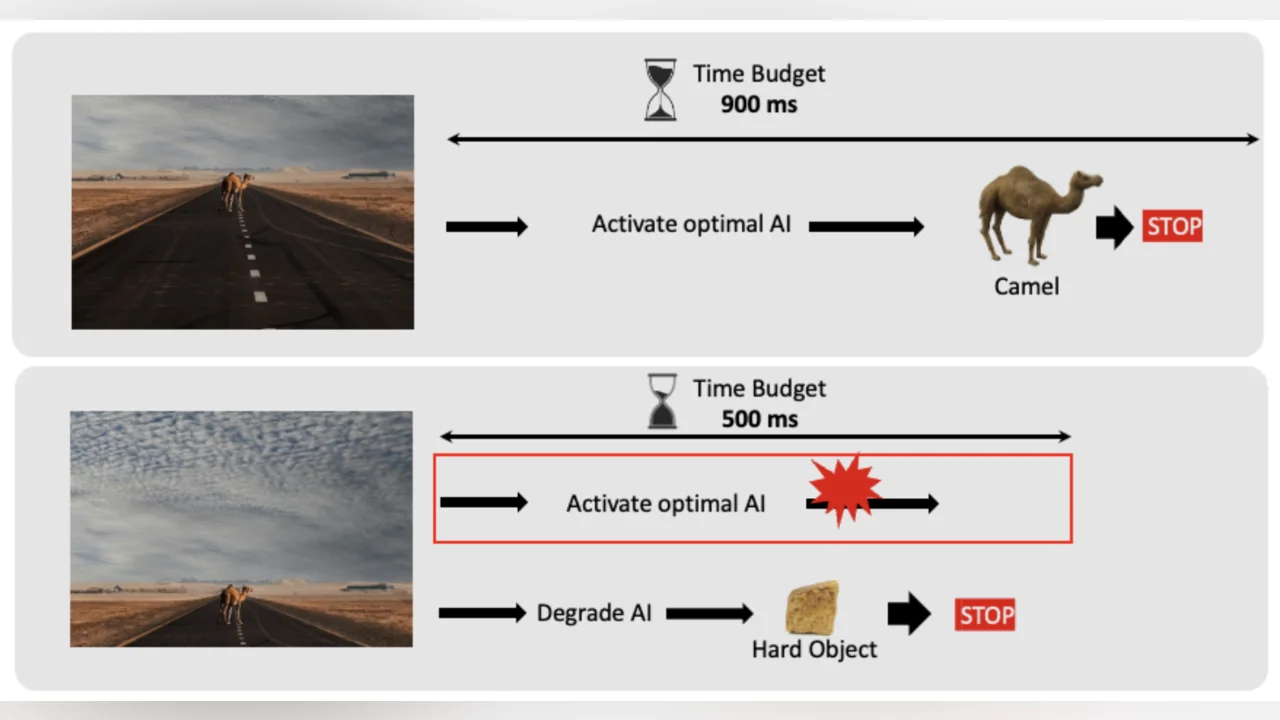

Current autonomous driving solutions still face challenges in achieving expected safety goals. Our AI-based analysis of an NHTSA dataset shows that a significant percentage of failures is due to AI systems not delivering results within the critical time window, which leads to crashes or driver handovers. This motivates us to investigate AI models that can deliver within time-safety bounds, even at the cost of some quality degradation, as long as the output remains “useful” for safe action. For example, as Figure 1 illustrates, a timely identification of an obstacle (here, a camel) as a “hard object” is more valuable than an optimal classification as a “Camel” that arrives after the safety time has expired.

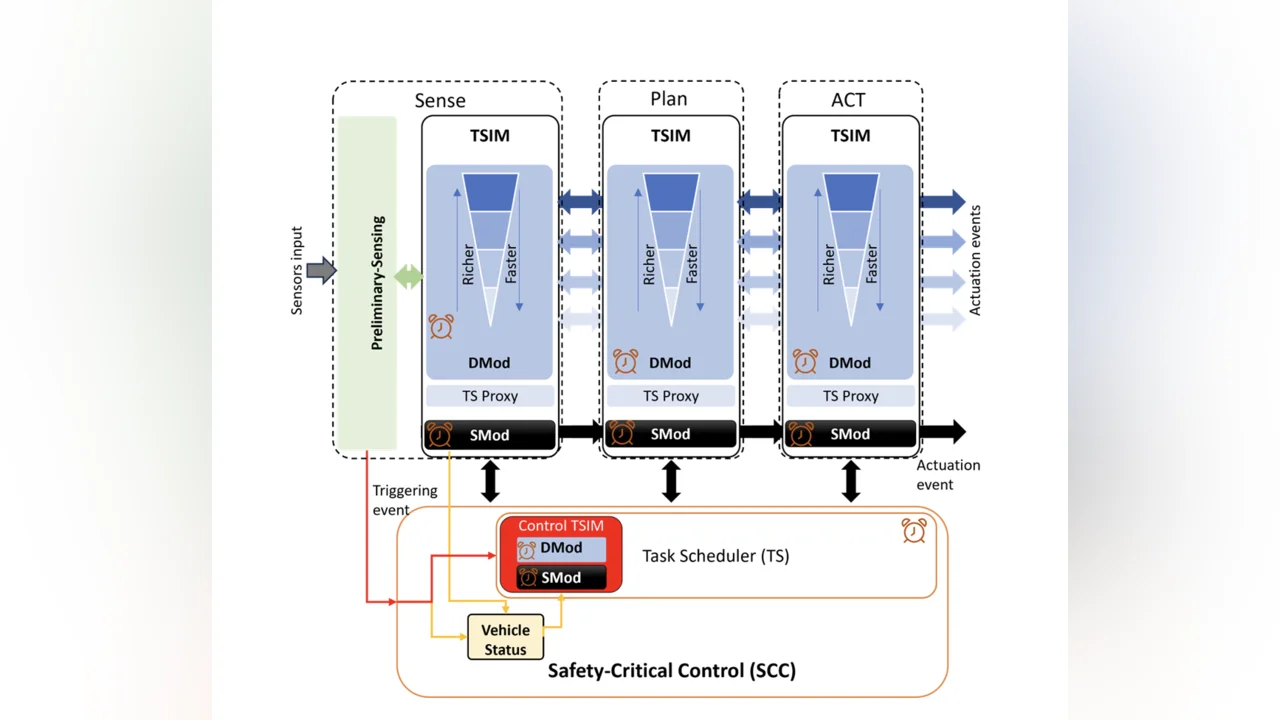

The Savvy project aims to design, implement, and evaluate a time-aware, degradation-adaptive autonomous driving system. The system integrates dynamic AI modules capable of tunable behavior with static fallback modules for safety-critical situations, all coordinated by a Safety-Critical Control layer with context-aware fusion and time-sensitive scheduling. The research will explore multiple AI strategies, including early-exit models, anytime inference, multi-exit deep neural networks, reinforcement learning–based runtime model selection, knowledge distillation ensembles, lightweight model compression/pruning methods, and adaptive sensor fusion. We will refine the initial system architecture (Figure 2) as needed while exploring these strategies.
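
To make the interplay between tunable AI modules, a static fallback, and time-sensitive scheduling concrete, the following is a minimal sketch and not the project's implementation. It assumes a hypothetical multi-exit perception model in which each exit has a known worst-case latency estimate and deeper exits are both slower and more precise. The names `Exit`, `select_exit`, `perceive`, and `static_fallback`, as well as all latency values, are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class Exit:
    """One exit point of a hypothetical multi-exit perception model."""
    name: str
    est_latency_ms: float          # assumed worst-case latency to reach this exit
    infer: Callable[[str], str]    # maps a sensor frame to a label


def static_fallback(frame: str) -> str:
    """Static, non-adaptive fallback: treat the scene conservatively."""
    return "unknown obstacle: brake"


def select_exit(exits: List[Exit], budget_ms: float) -> Optional[Exit]:
    """Pick the slowest exit that still fits the time budget.

    Assumption: a higher latency estimate means a deeper, more precise exit.
    """
    feasible = [e for e in exits if e.est_latency_ms <= budget_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda e: e.est_latency_ms)


def perceive(frame: str, exits: List[Exit], budget_ms: float) -> Tuple[str, str]:
    """Return (module used, label), degrading quality to meet the deadline."""
    chosen = select_exit(exits, budget_ms)
    if chosen is None:
        # No AI exit can finish in time, so hand over to the static module.
        return "static_fallback", static_fallback(frame)
    return chosen.name, chosen.infer(frame)


# Illustrative exits mirroring the "hard object" vs. "camel" example.
EXITS = [
    Exit("coarse", 5.0, lambda frame: "hard object"),
    Exit("fine", 40.0, lambda frame: "camel"),
]

if __name__ == "__main__":
    for budget in (50.0, 10.0, 1.0):
        print(budget, perceive("frame-0", EXITS, budget))
```

With a generous budget the sketch returns the fine-grained label; as the budget shrinks it degrades to the coarse label and finally to the static fallback. A real scheduler would likely also account for measured rather than estimated latency and for context from sensor fusion, which this sketch omits.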